Uncovering Anomalies in Transaction Data: A Deep Dive into Machine Learning Techniques

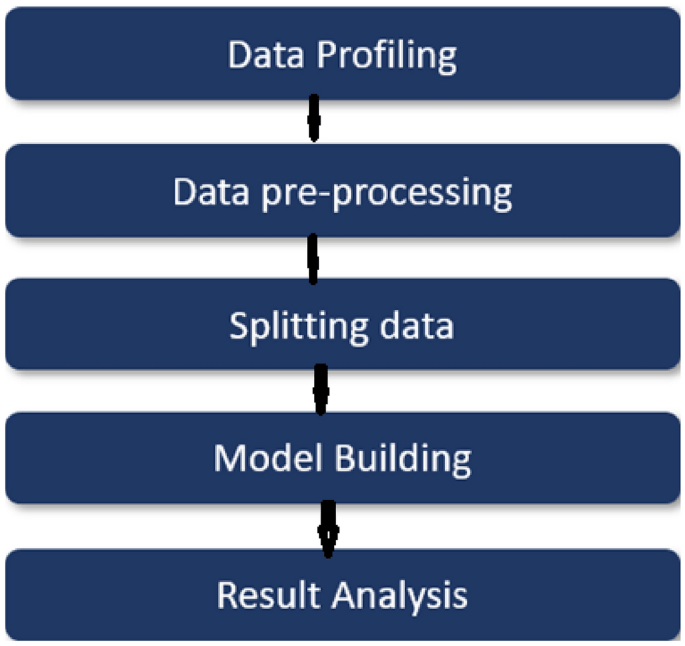

In the vast ocean of transactions that flow through financial systems daily, distinguishing between legitimate activities and fraudulent ones is a monumental challenge. Our primary objective is to identify anomalies, specifically the illicit transactions that threaten the integrity of these systems, while ensuring that legitimate users can continue their transactions without interruption. Achieving this requires machine learning models that keep false positives (legitimate transactions flagged as illicit) low while holding false negatives (illicit transactions that go undetected) to an acceptable rate. In this article, we will explore several algorithms, including Random Forest, Logistic Regression, Graph Convolutional Networks (GCN), Long Short-Term Memory (LSTM), and Support Vector Machines (SVM), to tackle this pressing issue.

The Challenge of Anomaly Detection

Anomaly detection in transaction data is akin to finding a needle in a haystack. With millions of transactions occurring every day, the task is not only about identifying fraudulent activities but also about ensuring that legitimate transactions are not flagged incorrectly. This is where the balance between false positive and false negative rates becomes crucial. A high false positive rate can lead to unnecessary disruptions for innocent users, while a high false negative rate can allow fraudsters to slip through the cracks.

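To classify transactions as either licit or illicit, we employ several machine learning algorithms, each with its own strengths and weaknesses. Hyperparameter tuning plays a significant role in optimizing these models: the learning rate, for instance, governs how gradient-trained models such as LSTMs and GCNs converge, while settings such as regularization strength or tree depth shape the classical models. We use GridSearchCV, which evaluates every combination in a predefined parameter grid with cross-validation and keeps the best-scoring one, to fine-tune these parameters.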
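scikit-learn's GridSearchCV exhaustively tries every combination in a parameter grid and keeps the combination with the best mean cross-validation score. The pure-Python sketch below reproduces that loop without any library so the mechanics are visible. The k-nearest-neighbour model, its `k` and `metric` parameters, the helper names, and the toy data are all hypothetical stand-ins, not the models or data behind this article's results.

```python
import itertools
import math
import statistics

def knn_predict(train_X, train_y, x, k, metric):
    """Hypothetical model: majority vote among the k nearest training points."""
    def distance(a, b):
        if metric == "manhattan":
            return sum(abs(ai - bi) for ai, bi in zip(a, b))
        return math.sqrt(sum((ai - bi) ** 2 for ai, bi in zip(a, b)))
    nearest = sorted(range(len(train_X)), key=lambda i: distance(train_X[i], x))[:k]
    return 1 if 2 * sum(train_y[i] for i in nearest) > k else 0

def cv_score(X, y, params, n_folds=5):
    """Mean accuracy over n_folds folds; fold f validates on rows f, f+n_folds, ..."""
    scores = []
    for fold in range(n_folds):
        val_rows = set(range(fold, len(X), n_folds))
        train_rows = [i for i in range(len(X)) if i not in val_rows]
        train_X = [X[i] for i in train_rows]
        train_y = [y[i] for i in train_rows]
        correct = sum(knn_predict(train_X, train_y, X[i], **params) == y[i] for i in val_rows)
        scores.append(correct / len(val_rows))
    return statistics.mean(scores)

def grid_search(X, y, param_grid, n_folds=5):
    """Try every combination in param_grid and keep the best mean CV score."""
    names = sorted(param_grid)
    best_params, best_score = None, -1.0
    for values in itertools.product(*(param_grid[name] for name in names)):
        params = dict(zip(names, values))
        score = cv_score(X, y, params, n_folds)
        if score > best_score:  # strict '>' keeps the first combination on ties
            best_params, best_score = params, score
    return best_params, best_score

# Hypothetical toy data: (amount in thousands, hours since previous transaction); 1 = illicit
X = [(0.12, 30.0), (0.08, 48.0), (0.10, 24.0), (0.06, 72.0), (0.11, 36.0),
     (0.90, 0.5), (0.85, 1.0), (0.70, 0.2), (0.95, 0.8), (0.06, 60.0)]
y = [0, 0, 0, 0, 0, 1, 1, 1, 1, 0]

best_params, best_score = grid_search(X, y, {"k": [1, 3, 5], "metric": ["euclidean", "manhattan"]})
print(best_params, round(best_score, 3))
```

In scikit-learn the same idea is expressed by constructing `GridSearchCV(estimator, param_grid, cv=5)`, calling `.fit(X, y)`, and reading `.best_params_` and `.best_score_`.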

Logistic Regression: A Statistical Approach

Logistic Regression (LR) is a foundational supervised learning algorithm for binary classification. It estimates the probability that a given input belongs to the positive class (here, an illicit transaction). The model computes a weighted linear combination of the input features and passes it through the sigmoid (logistic) function, which maps any real number to a value between 0 and 1.

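The model can be written in terms of the log-odds:

$$

\log{\left[ \frac{p}{1-p}\right]} = b_0 + b_1x_1 + b_2x_2 + \cdots + b_nx_n

$$

where $p$ is the predicted probability of the positive class, $x_1, \dots, x_n$ are the input features, and $b_0, \dots, b_n$ are the learned coefficients. Solving for $p$ gives $p = 1 / \left(1 + e^{-(b_0 + b_1x_1 + \cdots + b_nx_n)}\right)$, which is exactly the sigmoid of the linear combination.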
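The fitting step can be sketched in plain Python as batch gradient descent on the log-loss, one standard way to estimate the intercept $b_0$ and coefficients $b_1, \dots, b_n$. This is a minimal illustration, not the tuned model behind the reported results: the two features, the data, and the `learning_rate` and `epochs` values are hypothetical, and library implementations typically use more sophisticated solvers plus regularization.

```python
import math

def sigmoid(z):
    """Numerically stable logistic function: maps any real z into (0, 1)."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def fit_logistic_regression(X, y, learning_rate=0.1, epochs=1000):
    """Estimate b0 (intercept) and b1..bn by batch gradient descent on the log-loss."""
    n_samples, n_features = len(X), len(X[0])
    b0, b = 0.0, [0.0] * n_features
    for _ in range(epochs):
        grad0, grad = 0.0, [0.0] * n_features
        for xi, yi in zip(X, y):
            z = b0 + sum(bj * xij for bj, xij in zip(b, xi))  # the log-odds
            error = sigmoid(z) - yi  # derivative of the log-loss with respect to z
            grad0 += error
            for j in range(n_features):
                grad[j] += error * xi[j]
        b0 -= learning_rate * grad0 / n_samples
        b = [bj - learning_rate * gj / n_samples for bj, gj in zip(b, grad)]
    return b0, b

def predict_proba(b0, b, x):
    """Probability that x belongs to the positive (illicit) class."""
    return sigmoid(b0 + sum(bj * xj for bj, xj in zip(b, x)))

# Hypothetical features: (amount scaled to [0, 1], 1 if the account is new else 0)
X = [[0.1, 0.0], [0.2, 0.0], [0.3, 1.0], [0.8, 1.0], [0.9, 1.0], [0.7, 0.0]]
y = [0, 0, 0, 1, 1, 1]  # 1 = illicit
b0, b = fit_logistic_regression(X, y, learning_rate=0.5, epochs=2000)
print("P(illicit) for [0.95, 1.0]:", round(predict_proba(b0, b, [0.95, 1.0]), 3))
```

A larger `learning_rate` converges in fewer epochs but can overshoot, which is why it is a natural candidate for a grid search.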

In our application, we achieved an impressive accuracy of 97.52% through careful hyperparameter tuning using GridSearchCV, demonstrating the effectiveness of this algorithm in classifying transactions.

Random Forest Classifier: Ensemble Learning at Its Best

The Random Forest algorithm is another powerful supervised learning technique that utilizes ensemble learning. By constructing multiple decision trees and aggregating their outputs, Random Forest enhances model accuracy and robustness. The process involves:

- Specifying the number of decision trees to be created.

- Building each tree on a bootstrap sample (data points drawn with replacement) of the training set, considering only a random subset of features at each split.

- Voting on the outputs of these trees, with the majority vote determining the final classification.

To optimize the model, we apply the same GridSearchCV tuning used for logistic regression, focusing on parameters such as max_features (the number of features considered at each split) and max_depth (the maximum depth of each tree).
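To make the three steps concrete, here is a deliberately tiny random forest in plain Python. As a simplifying assumption, each tree is a decision stump (the equivalent of `max_depth=1`), so the random subset of `max_features` features is drawn once per tree rather than at every split. The function names, default values, and toy data are hypothetical.

```python
import random
from collections import Counter

def stump_predict(stump, x):
    """polarity=1 flags values above the threshold; polarity=-1 flags values below it."""
    f, threshold, polarity = stump
    return 1 if polarity * (x[f] - threshold) > 0 else 0

def fit_stump(X, y, feature_indices):
    """Fit a depth-1 tree: the (feature, threshold, polarity) with the fewest errors."""
    best = None  # (errors, feature, threshold, polarity)
    for f in feature_indices:
        for threshold in sorted({x[f] for x in X}):
            for polarity in (1, -1):
                errors = sum(stump_predict((f, threshold, polarity), xi) != yi
                             for xi, yi in zip(X, y))
                if best is None or errors < best[0]:
                    best = (errors, f, threshold, polarity)
    return best[1:]

def fit_forest(X, y, n_estimators=25, max_features=1, seed=0):
    rng = random.Random(seed)
    n_samples, n_features = len(X), len(X[0])
    forest = []
    for _ in range(n_estimators):
        rows = [rng.randrange(n_samples) for _ in range(n_samples)]  # bootstrap sample
        features = rng.sample(range(n_features), max_features)      # random feature subset
        forest.append(fit_stump([X[i] for i in rows], [y[i] for i in rows], features))
    return forest

def forest_predict(forest, x):
    """Majority vote over all trees."""
    votes = Counter(stump_predict(tree, x) for tree in forest)
    return votes.most_common(1)[0][0]

# Hypothetical scaled features; 1 = illicit
X = [[0.10, 0.20], [0.20, 0.10], [0.30, 0.30], [0.25, 0.15],
     [0.80, 0.90], [0.90, 0.70], [0.70, 0.80], [0.85, 0.95]]
y = [0, 0, 0, 0, 1, 1, 1, 1]
forest = fit_forest(X, y)
print(forest_predict(forest, [0.95, 0.90]), forest_predict(forest, [0.05, 0.10]))
```

An odd `n_estimators` avoids tied votes in binary classification.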

Support Vector Machine: Defining Decision Boundaries

Support Vector Machines (SVM) are another critical tool in our arsenal for anomaly detection. An SVM finds the hyperplane that best separates the classes in feature space. It handles linearly separable data directly and, through kernel functions such as the radial basis function (RBF) kernel, non-linear class boundaries as well.

The strength of SVM lies in choosing the decision boundary that maximizes the margin, the distance to the nearest training points of each class (the support vectors). A wide margin tends to generalize better to unseen data, which helps when separating legitimate from fraudulent transactions.
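A linear SVM can be trained by minimizing the L2-regularized hinge loss. The sketch below uses Pegasos-style stochastic subgradient descent, one of several valid training methods; kernelized SVMs are usually trained through the dual formulation instead, which this sketch does not cover. Labels are assumed to be -1 for licit and +1 for illicit, and the regularization strength `lam`, the data, and the function names are illustrative assumptions.

```python
import random

def train_linear_svm(X, y, lam=0.01, epochs=200, seed=0):
    """Pegasos-style stochastic subgradient descent on the L2-regularized hinge loss.

    Labels must be -1 (licit) or +1 (illicit). A constant 1.0 feature is appended
    so the last weight acts as the bias; regularizing it too is a simplification.
    """
    rng = random.Random(seed)
    data = [(list(x) + [1.0], label) for x, label in zip(X, y)]
    w = [0.0] * len(data[0][0])
    t = 0
    for _ in range(epochs):
        rng.shuffle(data)
        for x, label in data:
            t += 1
            eta = 1.0 / (lam * t)  # decreasing step size
            margin = label * sum(wi * xi for wi, xi in zip(w, x))
            w = [(1.0 - eta * lam) * wi for wi in w]  # shrink: gradient of the L2 term
            if margin < 1.0:  # inside the margin or misclassified: hinge subgradient step
                w = [wi + eta * label * xi for wi, xi in zip(w, x)]
    return w

def decision_function(w, x):
    """Signed score; positive means the illicit side of the hyperplane."""
    return sum(wi * xi for wi, xi in zip(w, list(x) + [1.0]))

# Hypothetical scaled features
X = [[0.1, 0.2], [0.2, 0.1], [0.3, 0.3], [0.8, 0.9], [0.9, 0.7], [0.7, 0.8]]
y = [-1, -1, -1, 1, 1, 1]
w = train_linear_svm(X, y)
print("weights (last is bias):", [round(v, 2) for v in w])
```

Points whose score falls between -1 and +1 lie inside the margin; those are the ones that keep updating the weights.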

Long Short Term Memory Networks: Capturing Temporal Patterns

Long Short-Term Memory (LSTM) networks are a specialized form of Recurrent Neural Network (RNN) designed to capture long-range temporal dependencies in sequential data. In the context of transaction data, LSTMs can learn from the sequence of transactions over time, allowing for the identification of patterns that may indicate fraudulent behavior.

Each LSTM cell contains four interacting layers (the forget gate, the input gate, the candidate cell-state layer, and the output gate) that control the flow of information through the cell's memory, known as the cell state. This lets the model retain important transaction details while discarding irrelevant ones, which is crucial for detecting anomalies that are not apparent from any single transaction in isolation.
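The four layers map directly onto the standard LSTM cell equations. The sketch below runs a single-unit cell (scalar input and hidden state) over a short sequence in plain Python; the weights and the sequence of scaled transaction amounts are hypothetical, hand-picked values rather than trained parameters.

```python
import math

def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def lstm_step(x, h_prev, c_prev, params):
    """One time step of a single-unit LSTM; params maps gate -> (w_x, w_h, bias)."""
    def pre_activation(gate):
        w_x, w_h, bias = params[gate]
        return w_x * x + w_h * h_prev + bias

    f = sigmoid(pre_activation("forget"))       # fraction of old cell state to keep
    i = sigmoid(pre_activation("input"))        # fraction of the candidate to write
    g = math.tanh(pre_activation("candidate"))  # candidate values for the cell state
    o = sigmoid(pre_activation("output"))       # fraction of the cell state to expose
    c = f * c_prev + i * g                      # updated long-term memory
    h = o * math.tanh(c)                        # new hidden state / output
    return h, c

# Hypothetical, hand-picked weights; a trained network would learn these.
params = {
    "forget": (0.5, 0.3, 1.0),
    "input": (1.0, 0.2, 0.0),
    "candidate": (1.2, -0.4, 0.0),
    "output": (0.8, 0.1, 0.0),
}

# Hypothetical sequence of scaled transaction amounts for one account
amounts = [0.1, 0.2, 0.1, 0.9, 1.0]
h, c = 0.0, 0.0
for x in amounts:
    h, c = lstm_step(x, h, c, params)
    print(f"x={x:.1f}  h={h:.3f}  c={c:.3f}")
```

Because the cell state `c` is updated additively rather than overwritten, information from early transactions can persist across many steps.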

Graph Convolutional Networks: Leveraging Graph Structures

In recent years, Graph Convolutional Networks (GCNs) have gained traction for their ability to process graph-structured data. Transactions can be represented as a graph, where nodes represent transactions and edges represent the flow of funds. This representation allows GCNs to capture complex relationships and dependencies within the data.

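By constructing a graph from transaction data, we can apply a GCN to classify nodes as either licit or illicit. Each GCN layer updates a node's representation by aggregating the features of its immediate neighbors, so stacking k layers lets the model draw on each node's k-hop neighborhood. This ability to learn from the local structure of the graph makes GCNs particularly effective for fraud detection in financial networks.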
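One common GCN layer, the symmetric-normalization rule introduced by Kipf and Welling, computes $H' = \sigma(\tilde{D}^{-1/2}\tilde{A}\tilde{D}^{-1/2} H W)$, where $\tilde{A}$ is the adjacency matrix with self-loops added, $\tilde{D}$ is its degree matrix, $H$ holds the node features, and $W$ is a learned weight matrix. The article does not specify which GCN variant it uses, so the plain-Python sketch below assumes this one. It also treats fund flows as undirected edges, and the four-node graph, features, and weights are hypothetical.

```python
import math

def matmul(A, B):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(A[i][k] * B[k][j] for k in range(len(B))) for j in range(len(B[0]))]
            for i in range(len(A))]

def normalized_adjacency(A):
    """Return D^-1/2 (A + I) D^-1/2 for an undirected adjacency matrix A."""
    n = len(A)
    A_tilde = [[A[i][j] + (1.0 if i == j else 0.0) for j in range(n)] for i in range(n)]
    deg = [sum(row) for row in A_tilde]
    return [[A_tilde[i][j] / math.sqrt(deg[i] * deg[j]) for j in range(n)] for i in range(n)]

def gcn_layer(A_norm, H, W, relu=True):
    """Aggregate neighbour features (A_norm @ H), then transform them (@ W)."""
    Z = matmul(matmul(A_norm, H), W)
    return [[max(0.0, z) for z in row] for row in Z] if relu else Z

def softmax_rows(Z):
    out = []
    for row in Z:
        m = max(row)  # subtract the max for numerical stability
        exps = [math.exp(z - m) for z in row]
        total = sum(exps)
        out.append([e / total for e in exps])
    return out

# Hypothetical 4-transaction graph with edges 0-1, 1-2, 2-3
A = [[0, 1, 0, 0],
     [1, 0, 1, 0],
     [0, 1, 0, 1],
     [0, 0, 1, 0]]
H = [[0.1, 1.0], [0.2, 0.8], [0.9, 0.1], [0.8, 0.2]]  # node features
W1 = [[0.5, -0.3], [0.2, 0.7]]                        # layer-1 weights (hypothetical)
W2 = [[1.0, -1.0], [-1.0, 1.0]]                       # layer-2 weights -> [licit, illicit]

A_norm = normalized_adjacency(A)
hidden = gcn_layer(A_norm, H, W1)
probs = softmax_rows(gcn_layer(A_norm, hidden, W2, relu=False))
for node, (p_licit, p_illicit) in enumerate(probs):
    print(f"node {node}: P(licit)={p_licit:.3f}  P(illicit)={p_illicit:.3f}")
```

With two layers, each node's prediction already depends on features up to two hops away.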

Evaluation Metrics: Measuring Success

To assess the performance of our models, we utilize several evaluation metrics, including Precision, Recall, and F1 Score. These metrics provide a comprehensive view of how well our models are performing in identifying fraudulent transactions.

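In the formulas below, TP, FP, and FN count the true positives (illicit transactions correctly flagged), false positives (licit transactions wrongly flagged), and false negatives (illicit transactions missed), treating illicit as the positive class.

- Precision measures the accuracy of positive predictions, calculated as:

  $$
  \text{Precision} = \frac{TP}{TP + FP}
  $$

- Recall assesses the model’s ability to identify all relevant instances, defined as:

  $$
  \text{Recall} = \frac{TP}{TP + FN}
  $$

- F1 Score combines precision and recall into a single metric (their harmonic mean), offering a balanced view of model performance:

  $$
  F_1 = \frac{2 \times \text{Precision} \times \text{Recall}}{\text{Precision} + \text{Recall}}
  $$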
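The three formulas translate directly into code. The plain-Python helper below treats illicit (label 1) as the positive class and returns 0.0 whenever a denominator would be zero, a common convention assumed here; the labels in the example are made up.

```python
def precision_recall_f1(y_true, y_pred, positive=1):
    """Compute precision, recall and F1 for the given positive label."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1

# Made-up labels: 4 illicit, 6 licit; the model catches 3 illicit and wrongly flags 1 licit.
y_true = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
y_pred = [1, 1, 1, 0, 1, 0, 0, 0, 0, 0]
print(precision_recall_f1(y_true, y_pred))  # (0.75, 0.75, 0.75)
```

On the same labels, a model that predicts licit for every transaction would still be 60% accurate while its recall is 0, which shows how accuracy alone can hide missed fraud.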

Our findings indicate that the GCN model outperforms others, achieving an accuracy of 98.56%, underscoring its effectiveness in detecting fraudulent transactions.

Conclusion

The challenge of identifying fraudulent transactions amid a sea of legitimate activity is a complex but critical task in today’s financial landscape. By combining several machine learning algorithms (Logistic Regression, Random Forest, Support Vector Machines, Long Short-Term Memory networks, and Graph Convolutional Networks), we can detect anomalies more effectively. Through careful hyperparameter tuning and evaluation, we can keep disruptions for legitimate users to a minimum while catching as many illicit transactions as possible. As technology continues to evolve, so too will our methods for safeguarding financial transactions, ensuring a secure environment for all users.